Diving into vibing

Blog: 10-Nov-2025

I've got that Bee Gees song in my head: "v-v-v-vibe coding, you're telling me lies...". Or something like that.

I had briefly mentioned vibe coding in The last developer to use AI but thought nothing more about it until Moonshot AI released Kimi CLI, which natively supports the Agent Client Protocol (ACP). That means that my code editor Zed can now access Moonshot AI's large language models more effectively. I talked about Kimi K2 in that article, but for those not familiar with it, Moonshot AI claims that "Kimi K2 is our latest Mixture-of-Experts model with 32 billion activated parameters and 1 trillion total parameters. It achieves state-of-the-art performance in frontier knowledge, math, and coding among non-thinking models."

And it's surprisingly cheap to access via the Kimi CLI. For occasional users like me who neither want nor need a monthly AI subscription, a cheap pay-as-you-go model works really well. How cheap? Well, I've used it to develop a small Mac desktop app, a small Windows desktop app, a small website and several versions of the app discussed here, plus various bits of refactoring on other projects and general code Q&A. And it's cost me $4.07 (£3.09) so far. Here in the UK you would pay more than that in Starbucks for a single coffee.

You could already access Kimi K2 in Zed via the older OpenAI-compatible API method (that's how I was using it in the previous article). But using Kimi K2 via ACP seems to produce better results. Maybe the Kimi CLI has more fine-tuned model settings or maybe it's handling context more effectively. Or maybe I'm just wrong, but it certainly feels like it's generating more comprehensive responses. Not very scientific, I know, but that's definitely my first impression.

But anyway, the upshot is that it generates impressive production-ready code with full documentation and tests, and it compiles and debugs its own code iteratively. So I got to thinking about vibe coding again and wondered if it was time to try it out for myself.

First though, here's what the guy who invented the term said when he invented the term:

There's a new kind of coding I call "vibe coding", where you fully give in to the vibes, embrace exponentials, and forget that the code even exists. It's possible because the LLMs (e.g. Cursor Composer w Sonnet) are getting too good...

– Andrej Karpathy, Twitter, 11:17 PM · Feb 2, 2025

I don't think anyone other than Andrej (who has a brain the size of Jupiter) quite knows what he means by "embrace exponentials", so I asked my local image generator for its opinion by prompting it for an image of "a man with a really large brain embracing exponentials". Here's what it gave me:

So now we know. But the rest of it is pretty clear: I should try to develop without even thinking about the code, and certainly without looking at it. The whole notion feels like something from the plot of a 1960s sci-fi movie where all the actors have 1960s haircuts and wear tight-fitting clothing for no obvious reason, other than to display the 1960s actresses' bodies in a manner which the 1960s male audience didn't mind at all, not even a little bit.

So all I need to do is just type into the prompt and let the LLM figure it all out. I would have tried speaking into the prompt like Andrej did to make it even more '60s sci-fi, but I haven't yet found a voice-to-text converter which works 100% reliably (maybe it's just my accent). And also I would get an unacceptable amount of ridicule and abuse from my wife—she'd post a video of me doing it (and repeating it, and swearing at it) on the family WhatsApp group so that the rest of them could make fun of me too. Such is the heavy burden of a family man.

But which model to choose? Andrej co-founded OpenAI, so presumably his piggy bank can stretch to a top-end model subscription from the likes of OpenAI or Anthropic. But down here in the shallow end of the gene pool, we ordinary folk only have dead moths in our piggy banks, so I'll be sticking with Kimi K2.

Finding something dull to vibe on

So I thought about what would make an ideal target for vibe coding, and came up with a list of criteria. It should be a tool which:

- has no security implications (i.e. no access to confidential data)

- is easy to describe in the prompt

- is self-contained with a clear purpose

- is easy to test

- makes your life easier in a small way

- doesn't really matter if it fails miserably

- is too boring to hand-code voluntarily

I think the last point is key; if it was interesting and fun to do, I would probably have already written it. Then it struck me: much as I enjoy making the stupid images to accompany my blog posts, one small niggle is that I have to convert each PNG image produced by my image generator into much less weighty JPG and WebP copies for use here on the site. Why both types? Because modern browsers support the WebP format, which typically gives smaller files than JPG at similar quality and so downloads faster. But older browsers only support JPGs. So the HTML people came up with a clever scheme to use both, which you can see if you do a "view page source" in your browser on this very page. So everybody is happy except for the poor bugger who has to convert all his PNGs to WebP and JPG.
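For the curious, the "clever scheme" is the HTML `<picture>` element: the browser uses the first `<source>` whose `type` it supports and otherwise falls back to the ordinary `<img>`. Here's a minimal sketch, in Rust to match the rest of this post, of a hypothetical helper (`picture_markup` is my own invention, not part of the converter) that writes that markup for a WebP/JPG pair sharing the same file name:

```rust
/// Escapes the characters that would break a double-quoted HTML attribute.
fn escape_attr(s: &str) -> String {
    // Replace '&' first so the entities added afterwards aren't escaped twice.
    s.replace('&', "&amp;")
        .replace('"', "&quot;")
        .replace('<', "&lt;")
}

/// Hypothetical helper: builds a `<picture>` element for `stem.webp` and
/// `stem.jpg`. Browsers that support WebP use the `<source>`; older ones
/// ignore it and fall back to the plain JPG `<img>`.
fn picture_markup(stem: &str, alt: &str) -> String {
    format!(
        "<picture>\n  <source srcset=\"{stem}.webp\" type=\"image/webp\">\n  <img src=\"{stem}.jpg\" alt=\"{alt}\">\n</picture>",
        stem = stem,
        alt = escape_attr(alt)
    )
}
```

So `picture_markup("cat", "A cat")` offers `cat.webp` to browsers that understand WebP and `cat.jpg` to everything else, which is exactly why I need both files.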

Of course, there is a plethora of image conversion tools out there, all of which you have to (i) find, and (ii) learn. So I started off using GIMP, but it's a big beast, and I get lost in the menus and options. So then I tried various freebie online sites, but most of them want to suck out every detail of your life to share with their hundreds of "trusted partners". I feel dirty every time I use a site like that.

So I thought: why not write my own? Now I had my goal: a super-easy command-line image converter. It met all of my criteria and would be fun to try out vibe coding on. Here's how it went...

Vibing!

The first prompt is from memory, but the rest are exactly as logged in the AI history, including my rushed typos which Kimi had no problems understanding.

(There are a few mentions of crates in the text below. For those unfamiliar with the term, it's the name for a collection of code in the Rust language which can either be compiled into an executable, or, as in the cases below, into a code library which can be imported and used within your own code to add new features. Think of a crate as being like adding a friend's fruit collection to your eggs, flour, etc. so that you can bake a fruit cake. Without their fruit you'd still have a cake, but it wouldn't be a fruit cake.)

Prompts followed by comments:

> Create a simple Rust command-line application which converts PNG images to both JPG and WEBP formats and converts JPG images to WEBP format, It should accept only a PNG or JPG file name as input and have no other options

Kimi wrote the code, did a build, fixed some build errors, then completed the job with an excellent descriptive README. It worked as described. So then I thought: why restrict it to PNGs and JPGs?...

> alter the application so that it can convert any image type supported by the image create into jpg or webp

No sweat. It read the image crate documentation (despite my typo) and the existing code to get a list of supported image types. Then it wrote code to let the image crate handle format detection. Then it looked to see if I had ImageMagick installed to make some test images. I didn't, so instead it wrote a Rust binary to generate its test images! I watched it create several test images, build, test, adjust the code, and rebuild until it was happy. Then it updated the README accordingly. But then I realised that the image crate it used doesn't support HEIF/HEIC images, which are now the default on Apple devices, so I suggested...

> can you add the ability to convert .HEIF and .HEIC images in the same way as the others

This was a struggle, as there is currently no easy way to do this across all platforms. Kimi tried several crates and ended up with a hybrid approach: an optional build flag, so users could enable HEIC support by rebuilding if they had the required system libraries installed. This was too messy for my taste, so I dropped HEIC support (also because Apple devices can easily export HEIC files as JPGs by simply copying them from the Photos app to the Files app).

> please remove the heif /heic conversion feature, the app is now too complicated

Kimi responded with: "You're absolutely right! Let me simplify the application by removing the HEIF/HEIC conversion feature and reverting to the clean, simple version". So it did just that and updated the README accordingly. It claimed that the WebP conversions were lossy, which is what I wanted, and I initially took that at face value. So I asked it to produce some stats for the README...

> The application is for website image use, so the default lossy settings are fine. Please add a comment in the README briefly explaining the default lossy percentage figures for jpg and webp and explain how they are suitable for website use

This is where it got interesting. Kimi read the code, decided that the WebP conversions were definitely lossy, and ran some tests that it claimed backed that up. But the WebP files were suspiciously large, and I became convinced this was in fact a lossless conversion. So I read the image crate docs, which confirmed that the crate was indeed doing a lossless WebP conversion. So I installed ImageMagick and challenged Kimi...

> The webp files are very large. You can make some test images with Imagemagick to prove this. The docs for the image crate state that the webp conversion is lossless which explains the large file size. Is there a way to have a lossy conversion to redcue the file size?

And it replied with "You're absolutely right! I checked the image crate documentation and it confirms that `WebPEncoder::new()` creates a **lossless** encoder, which explains the large file sizes. Let me create some test images with ImageMagick to demonstrate this and then implement a proper lossy WebP encoder". So it went searching for another crate, found one, installed it, wrote more code, tested it with more images that it made using ImageMagick this time, changed some compression settings, did some more rebuilds and tests, and finally got it working after about 5 minutes. Then it updated the README with the tested compression stats. But then I thought: why not have it do bulk file selections as well? So I asked it...

> Can you adapt it so that bulk conversions can be made using a wildcard such as *.png

It replied "Great idea! I'll adapt the application to support wildcard/bulk conversions. Let me modify it to accept multiple files and process them all." So it added the glob crate, wrote the new code, added more test images, wrote some error handling to detect invalid paths or failed conversions, tested it comprehensively, and updated the README with numerous command-line examples of how to select single or multiple files, including wildcards and even bash brace expansion, which I thought was pretty cool, if a bit niche. But it was streaming out 3 lines of confirmation or error messages for each file it processed, which was a bit too wordy for me. So I asked it...

> I want to make the output less verbose. Change the output so thaat lists the only the converted files but only counts the unsupported file formats, with conclsuin at the end saying, e.g., converted 9 of 17 files, ignored 7,

It took on board my crappy typos, and said "Great idea! I'll make the output less verbose by showing only converted files and providing a summary at the end. Let me modify the output logic". So it did the amend/build/test/rebuild loop a few times until it was happy. Then it concluded with the rather boastful statement: "The application now provides a much better user experience for bulk conversions while maintaining all the excellent compression performance!". Then it updated the README in a cloud of self-satisfaction, telling the world how good the application was. Job done.
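Out of interest, here's roughly what that last change boils down to. This is a minimal sketch under my own assumptions, not Kimi's code: the `Outcome`, `Report` and `run_bulk` names are made up, the extension list in `is_supported` is illustrative (the real app leaves format detection to the image crate), and the actual conversion is passed in as a closure. It also assumes the file list has already been expanded from the wildcard; bash usually does that itself for an unquoted `*.png`, and a quoted pattern arrives as literal text, which is presumably where the glob crate comes in. My example figures in the prompt (9 converted plus 7 ignored out of 17) leave one file unaccounted for, so the sketch counts failed conversions separately:

```rust
use std::path::Path;

/// What happened to one input file (hypothetical type, not from the app).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Outcome {
    Converted,
    Unsupported,
    Failed,
}

/// Illustrative extension check; the real app leaves format detection to
/// the image crate, which supports more formats than this short list.
fn is_supported(path: &Path) -> bool {
    const SUPPORTED: [&str; 7] = ["png", "jpg", "jpeg", "gif", "bmp", "tif", "tiff"];
    path.extension()
        .and_then(|ext| ext.to_str())
        .map(|ext| SUPPORTED.contains(&ext.to_ascii_lowercase().as_str()))
        .unwrap_or(false)
}

/// Converted files are kept by name; everything else is only counted.
#[derive(Debug, Default)]
struct Report {
    converted: Vec<String>,
    ignored: usize,
    failed: usize,
}

impl Report {
    fn record(&mut self, name: &str, outcome: Outcome) {
        match outcome {
            Outcome::Converted => self.converted.push(name.to_string()),
            Outcome::Unsupported => self.ignored += 1,
            Outcome::Failed => self.failed += 1,
        }
    }

    /// One-line summary in the style the prompt asked for.
    fn summary(&self) -> String {
        let total = self.converted.len() + self.ignored + self.failed;
        let mut line = format!(
            "converted {} of {} files, ignored {}",
            self.converted.len(),
            total,
            self.ignored
        );
        if self.failed > 0 {
            line.push_str(&format!(", failed {}", self.failed));
        }
        line
    }
}

/// Runs `convert` on every supported file, printing only the successes.
fn run_bulk<F>(paths: &[&str], mut convert: F) -> Report
where
    F: FnMut(&Path) -> Result<(), String>,
{
    let mut report = Report::default();
    for name in paths {
        let path = Path::new(name);
        let outcome = if !is_supported(path) {
            Outcome::Unsupported
        } else if convert(path).is_ok() {
            println!("converted {name}");
            Outcome::Converted
        } else {
            Outcome::Failed
        };
        report.record(name, outcome);
    }
    report
}
```

Only converted files get a line of their own; everything else just bumps a counter that ends up in the one-line summary.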

Conclusion

That was a blast! So what did I learn?

- vibe coding is a thing, at least for small apps like this

- LLMs like Kimi K2 are amazing

- LLMs are not yet perfect

In case anyone objects to my ad-hoc and very reactive style of vibe coding, I do of course realise that I could have sat down, thought for a while, and properly condensed all the features I wanted into one good, all-encompassing prompt. But life isn't like that, as anyone who has ever been to a customer demo meeting knows. You've just spent a fortnight writing the new feature they wanted, the full details of which were thrashed out minutely at great length. You demo it. Their response is always "yeah, that's great, but can you just...". So my vibe coding was acting like a real customer, just making changes as I went along. And Kimi coped with that just fine.

It's impossible to get a mile-high overview of a disruptive, fast-moving target like this, but there's no doubt that LLMs have already up-ended coding in a big way. Even so, as of late 2025 they still need the firm hand of a knowledgeable developer on the tiller. But what happens when the models get better and smarter, and we get a generation of developers who have never used anything else? Will that even matter by then? Since the dawn of programming, greybeards have always claimed that the latest abstraction will kill the profession. They said it about the jump from assembler to C, then from C to VB/Delphi, then from desktop to web apps, with each jump taking us further away from the underlying hardware. And now we're using English as a programming language, the highest level of abstraction we've ever had. But we're all still here, writing stuff that people are using. Maybe the next generation will become orchestrators rather than being down there in the dirt. No one knows yet, and that's the exciting part. Wild times, baby, and there ain't no going back.

Postscript: Stop the press!

I had just put the finishing touches to this article when I got an email from Moonshot AI announcing a big price drop for the Kimi K2 Thinking model, bringing it down to the same price as the Kimi K2 model I was using above. I couldn't resist that, so I loaded up Kimi K2 Thinking into Kimi CLI and kicked off the project once again with a brand-new prompt that was even more slack and open-ended than the first one:

Prompts followed by comments:

> how would you write a rust cli to accept image files, also wildcards like *.png as input, and create a copy of each image in web-optimised webp and formats

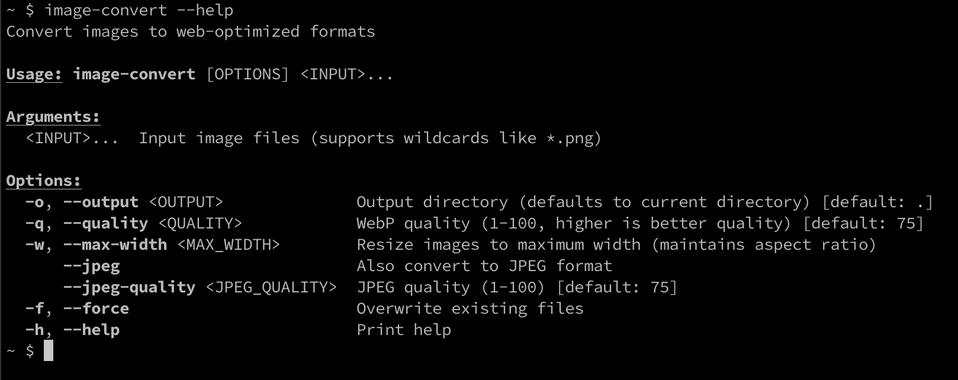

I was expecting a summary of how to write it, not the actual code, but it did that thinking-aloud thing, then wrote a much better version of the app than the non-thinking version. As before, it created test images and tested its code against them. It also added (unprompted by me) some extra optional features to resize an image, alter the compression quality, pick a target directory, and force overwriting of existing files. None of these got in the way of the prime function of creating a WebP. Only this time it made JPG creation optional by adding a --jpeg switch. It wasn't overly verbose in its output messages this time, and it just had better logic all round. Plus it got the lossy compression issue right first time. But for some reason it made 85% the default quality, which results in quite large files. So I asked it...

> Why did you choose a default 85% compression bearing in mind this is for use on web sites

So it gave me a big spiel comparing file sizes at various quality settings, and defended its choice by saying that 85% was the conservative, safe option. But it did admit that 75% was a better default for general website use, so I asked it...

> Change the default compression to 75% and explain this in the README

So it made the change, wrote a CHANGES.md file, and wrote a big section in the README comparing file sizes at various quality settings and explaining why 75% was the optimal default for website use. The app has 218 lines of well-commented, easy-to-read code. And this time the README had none of the over-the-top enthusiasm of the original version.
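To make those defaults concrete, here's a small sketch of how the options might fit together. Only the `--jpeg` switch and the 75% default come from the app itself; the `--quality` and `--force` flag names, the `Options` struct, and the rule that a JPG input doesn't get a second JPG copy are my own assumptions for illustration (resizing and the target directory are left out to keep it short). The real app's option names may well differ, so check `image-convert --help` rather than trusting this:

```rust
use std::path::{Path, PathBuf};

/// Hypothetical options: only `--jpeg` and the default quality of 75 come
/// from the app described above; the other flag names are assumptions.
#[derive(Debug)]
struct Options {
    quality: u8, // lossy quality, 1-100
    jpeg: bool,  // also write a JPG copy
    force: bool, // overwrite existing output files
    inputs: Vec<String>,
}

fn parse_args(args: &[&str]) -> Result<Options, String> {
    let mut opts = Options { quality: 75, jpeg: false, force: false, inputs: Vec::new() };
    let mut iter = args.iter();
    while let Some(arg) = iter.next() {
        match *arg {
            "--jpeg" => opts.jpeg = true,
            "--force" => opts.force = true,
            "--quality" => {
                // The value is the next argument, e.g. `--quality 60`.
                let value = iter.next().ok_or("--quality needs a value")?;
                let q: u8 = value
                    .parse()
                    .map_err(|_| format!("invalid quality: {value}"))?;
                if !(1..=100).contains(&q) {
                    return Err(format!("quality must be 1-100, got {q}"));
                }
                opts.quality = q;
            }
            other => opts.inputs.push(other.to_string()),
        }
    }
    Ok(opts)
}

/// Which files one input would produce: always a WebP copy, plus a JPG
/// copy when `--jpeg` is set (assumption: not for inputs that are already JPGs).
fn planned_outputs(input: &Path, opts: &Options) -> Vec<PathBuf> {
    let mut outputs = vec![input.with_extension("webp")];
    let already_jpg = input
        .extension()
        .and_then(|ext| ext.to_str())
        .map(|ext| ext.eq_ignore_ascii_case("jpg") || ext.eq_ignore_ascii_case("jpeg"))
        .unwrap_or(false);
    if opts.jpeg && !already_jpg {
        outputs.push(input.with_extension("jpg"));
    }
    outputs
}
```

With no flags, `photo.png` produces just `photo.webp` at quality 75; adding `--jpeg` puts a `photo.jpg` alongside it.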

So I can confidently say that the Kimi K2 Thinking version is a big step up from the (still very good) non-thinking version. And here's the result of running image-convert --help:

The improved version is on GitHub as Image-convert if you want to see it for yourself.

The only thing I changed throughout the whole process was to add an Acknowledgements section at the end of the README to give credit where credit was due. And I changed the name it gave itself (image-optimizer) to image-convert to avoid having to remember whether it was optimizer with a z (USA style) or optimiser with an s (UK style).

This stuff is seriously unreal. My mind is already buzzing for the next project. This isn't just an improvement in how we code, it's a revolution.